thbe blogs

This is the my blog around computer stuff and private things ... enjoy!

Feb 17, 2014

Open source data center, interlude

http://thbe.blogspot.de/2014/01/open-source-data-center-part-1.html

http://thbe.blogspot.de/2014/01/open-source-data-center-part-2.html

http://thbe.blogspot.de/2014/01/open-source-data-center-part-3.html

http://thbe.blogspot.de/2014/01/open-source-data-center-part-4.html

http://thbe.blogspot.de/2014/01/open-source-data-center-part-5.html

The hardware is installed, the first system is half done. Now there should be the part where some virtual machines are pushed to the box controlling the infrastructure and the services.

So far so good, but before I describe this, I would like to talk a bit about developing and testing. Setting up complex scenarios like virtual data centers, even based on infrastructure as code, demands on testing the procedures before deploying it into production. This requires the possibility to reproduce the real setup in a virtual way. I start working with Vagrant (http://www.vagrantup.com/) to test my Puppet modules. Vagrant use VirtualBox to create virtual machines based on a definition file. You can find some examples here. The main problem with Vagrant is, you need quite powerful hardware to use it in scenarios with more than one vm.

More a bit by accident I saw a video on the Puppetlabs channel where Tomas Doran talks about Docker (https://www.docker.io/). Docker is somehow a lightweight version of Vagrant using Linux containers instead of full vms. This restrict the use case to Linux testing but gives you the ability to do this much faster (start a container in less than 5 seconds) and do this with much more complexity on ordinary hardware. I'll shared a beta of my three Docker scenarios (scientific, centos and fedora) on my github account on https://github.com/thbe/virtual-docker. Feel free to extend the examples and push merge requests on github.

Back to the open source data center, I currently think about changing the strategy a bit and use techniques like Docker for demonstration. So it could be, that it take some time before I continue with this blog series, depending on the procedure I'll use to show the further steps.

Jan 31, 2014

Open source data center, part 5

Jan 27, 2014

Open source data center, part 4

- Make administrative work reusable

- Introduce version control to administration

- Ensure a defined state on a server and keep that state

- Generate documentation out of configuration

- Use code checks (syntax and style)

- Can be used with continuous integration

- Reduce time and cost to test

- Reduce time and cost to deliver

- Minimize downtimes

- Introduce rollback features

- Increase the ability to reproduce and fix defects

So, why is this important to this blog series? Quite simple, all components in the open source data center will be deployed and bootstraped using Puppet modules freely available on the net, in this case on the Puppet forge (http://forge.puppetlabs.com/).

Open source data center, part 3

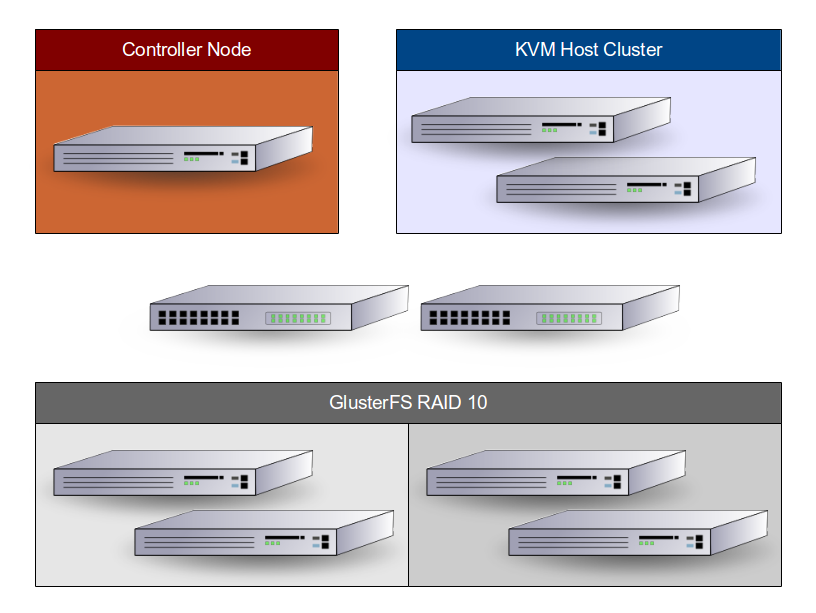

In the open source data center we have three logical units, storage servers, virtual hosts and a controller node. All servers will run with a RHEL clone, in this case with Scientific Linux.CentOS or RHEL itself will also work and, with some modifications in the bootstrap scripts, almost any Linux will be able to server this kind of structure. There is no special reason for using SL except the case that it is enterprise ready and that it is supported for more than ten years.

The storage servers will operate as a network RAID system based on GlusterFS (http://www.gluster.org/). GlusterFS is used i.e. at CERN to store the huge amount of data collected during there experiments. It can scale up to many Peta bytes with standard hardware.

Jan 15, 2014

Open source data center, part 2

- 1 rack

- 2 switches

- 4 storage nodes

- 1 controller node

- 2 host nodes

The rack should be a full one with 40 HEs or more. You can start with smaller ones or even without a rack at all, but again, when it come to scale you have to replace most of your components. The switches should have 48 ports and operate at least at 1GB. It should be possible to remotely manage the switches. Based on the fact that Puppet is used for configuration management, I would recommend a switch that can be configured with Puppet (http://puppetlabs.com/blog/puppet-network-device-management).

The storage nodes are standard hardware as well, they should be able to serve 8 hard discs, so you get the flexibility to establish hybrid storage pool. It is also possible to start with 2 instead of 4 storage nodes, but then you can not start with a cloud RAID 10.

The controller node will contain all services that are needed to manage the data center software. You can realize much of this services in a virtual machine as well, but you will complicate things if one of these controller virtual machines is failing. So moving this to a separate machine can help you. Keep in mind, this one is a single point of failure in the current layout, so you may have to take additional actions to get this node redundant.

Last but not least, you need 2 nodes or more, serving the virtual machines. The most important thing is fast CPU and lot of RAM. The disk is not so important on a clustered host node. If all things go right, the hypervisor is loaded into RAM and the storage is in the SAN so the local disc is only used for booting.

Jan 11, 2014

Open source data center, part 1

In the first part of this blog post series I would like to explain the the general concept of the open source data center. The data center should be easily to deploy in small scale but able to transform to large scale without much effort and most important, without major design changes.

To archive this, the concept will focus on standard hardware components. This will increase the flexibility when it comes to upgrades and lower the overall costs because these components are way cheaper compared to specialized components.

This approach result in the first design decision to build the data center based on clustered components. With this approach it is possible to combine low or mid range performance components to a high performance cluster.

The data center will be split into a storage cluster part, a virtual host cluster part and a management control system for automation.

The next part of this blog series will discuss the hardware that will be used for an initial setup.

Jan 10, 2014

Puppet

After long time of inactivity, it's time to share some informations again. Currently I'm working with Puppet (http://puppetlabs.com/) and several surrounding tools to create a complete, managed datacenter stack. Within the next months, I'll try to compose some blog posts around this topic with more detailed tipps and tricks in how this goal could be reached.

Nov 11, 2012

Ubuntu, the good and the bad

I just experience another episode of the good and the bad, in this case for Ubuntu but not restricted to Ubuntu, in principle it applies to the whole Linux world. Let's start with the bad, I've upgraded my Laptop from Ubuntu 12.04.1 LTS to 12.10. Unfortunately, the upgrade procedure breaks in the middle of the upgrade with an error message like a package has two versions is blocking itself.

The result was a half upgraded system already announcing itself as fully upgraded. Some hours of investigation later I figured out that an old install of skype mixing up x64 und i386 packages caused the problems. Ok, my fault, installing Microsoft Software on a Linux system might not be the smartest idea. Nevertheless, the situation was fixed and I had to deal with it. Simply re-installing the system was not an option because I use an encrypted home drive and I didn't write down the master key (ok, I know, my fault as well).

So I need to fix the system. Fortunately, and now we enter the good, Linux has a central package system giving you the ability to check, remove, re-install the whole system without messing up the configuration. The first action was to remove the blocking package. The next step was to remove all remaining i386 packages (they are only used for a small amount of packages) resulting in a system that wasn't even able to boot. So the next step was to boot the system from a rescue CD, chroot to the original system and re-install all removed i386 packages as amd64 packages again. This took some time and need some rework on the network settings but in the end, I was able to boot my system again. Now I had to finish the upgrade manually, fix some corrupted packages and ... tattata ... my system was back again and upgraded.

To summarize the action, the bad thing is that Linux can also get corrupted, the good thing is, nearly every corrupted status could be transformed into a good working state again with tools available for free at the internet.

Feb 22, 2012

XBMC Eden Live on Zotac Zbox ID41 Plus

The next, much more interesting thing, was the software decision. The first attempt was based on OpenELEC.This one is a media distribution, stripped down to the necessary elements and focused on the ION2 box. If you are seeking for something small and fast where interaction with the underlaying operating system is outside the scope, you will be definitely on the right track. But, this isn't what I'm seeking for. So my second attempt was the XBMC Live distribution. This one was closer to my needs but still requires a lot of work to get a media box which fit my needs. So my last attempt till now is the XMBCbuntu Beta3.

The sound configuration didn't work out-of-the-box. I need to customize it:

Settings --> System --> Audio-Hardware

Output --> Optical/Coaxial

Speaker --> 5.1

AC3 --> deselect

DTS --> deselect

Audio Output --> Custom --> plug:both

Digital Audio Output --> Custom --> plug:both

I don't have a clue why DTS-HD is only working with setting optical/coaxial instead of HDMI what is in the end used for audio transport to the TV, but this is the setting that is in the end working for me.

The other customizations I've done are more or less cosmetic changes which I will describe in later postings.